The place where your backups will be saved is called a “repository”. This chapter explains how to create (“init”) such a repository. The repository can be stored locally, or on some remote server or service. We’ll first cover using a local repository; the remaining sections of this chapter cover all the other options. You can skip to the next chapter once you’ve read the relevant section here.

For automated backups, restic accepts the repository location in the environment variable RESTIC_REPOSITORY. Restic can also read the repository location from a file specified via the --repository-file option or the environment variable RESTIC_REPOSITORY_FILE. For the password, several options exist:

- Setting the environment variable RESTIC_PASSWORD

- Specifying the path to a file with the password via the option --password-file or the environment variable RESTIC_PASSWORD_FILE

- Configuring a program to be called when the password is needed via the option --password-command or the environment variable RESTIC_PASSWORD_COMMAND
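A minimal sketch of the non-interactive setup described above, assuming a local repository at /srv/restic-repo and a password file whose location is only a placeholder:

```shell
# Placeholder values for illustration; adjust both paths to your setup.
export RESTIC_REPOSITORY=/srv/restic-repo
export RESTIC_PASSWORD_FILE=/root/.restic-password

# With both variables exported, later restic calls in the same shell or
# script need neither a repository option nor an interactive password prompt.
echo "repository:    $RESTIC_REPOSITORY"
echo "password file: $RESTIC_PASSWORD_FILE"
```

The password file should be readable only by the user running the backup.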

Local¶

In order to create a repository at /srv/restic-repo, run the following command and enter the same password twice:
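The command can be sketched as follows. The snippet only assembles and prints it, so nothing is created until you run the printed line yourself; restic then asks for the new password twice.

```shell
# Repository path taken from the text above.
repo=/srv/restic-repo

# Assemble the init command and print it for review instead of running it.
init_cmd="restic init --repo $repo"
echo "$init_cmd"
```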

Warning

Remembering your password is important! If you lose it, you won’t be able to access data stored in the repository.

Warning

On Linux, storing the backup repository on a CIFS (SMB) share is not recommended due to compatibility issues. Either use another backend or set the environment variable GODEBUG to asyncpreemptoff=1. Refer to GitHub issue #2659 for further explanations.

SFTP¶

In order to back up data via SFTP, you must first set up a server with SSH and let it know your public key. Passwordless login is really important since restic fails to connect to the repository if the server prompts for credentials.

Once the server is configured, the setup of the SFTP repository can simply be achieved by changing the URL scheme in the init command:
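For instance, assuming a server reachable as host with a login named user (both placeholders), the repository location takes the form sftp:user@host:/path. The sketch prints the resulting init command instead of running it:

```shell
# Placeholder login and host name; replace with your own.
user=user
host=host

# Absolute path on the server, prefixed with the sftp: scheme.
repo="sftp:${user}@${host}:/srv/restic-repo"
echo "restic -r $repo init"
```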

You can also specify a relative (read: no slash (/) character at the beginning) directory; in this case the directory is relative to the remote user’s home directory.

Also, if the SFTP server is enforcing domain-confined users, you can specify the user this way: user@domain@host.

Note

Please be aware that sftp servers do not expand the tilde character (~) normally used as an alias for a user’s home directory. If you want to specify a path relative to the user’s home directory, pass a relative path to the sftp backend.

If you need to specify a port number or IPv6 address, you’ll need to use URL syntax. E.g., the repository /srv/restic-repo on [::1] (localhost) at port 2222 with username user can be specified as

Note the double slash: the first slash separates the connection settings from the path, while the second is the start of the path. To specify a relative path, use one slash.
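The URL form can be sketched by assembling it from its parts, which makes the origin of the double slash visible. The variable names are only illustrative:

```shell
user=user
host='[::1]'            # IPv6 addresses go in square brackets
port=2222
path=/srv/restic-repo   # absolute path, so it starts with its own slash

# One slash ends the connection settings, the next one begins the path.
repo="sftp://${user}@${host}:${port}/${path}"
echo "restic -r $repo init"
```

With a relative path such as restic-repo, the same assembly yields a single slash before the path.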

Alternatively, you can create an entry in the ssh configuration file, usually located in your home directory at ~/.ssh/config or in /etc/ssh/ssh_config:

Then use the specified host name foo normally (you don’t need to specify the user name in this case):
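A sketch of such an entry and the matching repository location follows. The user name bar and port 2222 are example values, and the entry is written to a scratch file here so the snippet does not touch your real ~/.ssh/config:

```shell
# Scratch file for illustration; the entry normally lives in ~/.ssh/config.
ssh_config="$(mktemp)"
cat > "$ssh_config" <<'EOF'
Host foo
    User bar
    Port 2222
EOF

# The host alias replaces user name, host name and port in the location.
repo="sftp:foo:/srv/restic-repo"
cat "$ssh_config"
echo "restic -r $repo init"
```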

You can also add an entry with a special host name which does not exist, just for use with restic, and use the Hostname option to set the real host name:

Then use it in the backend specification:

Last, if you’d like to use an entirely different program to create the SFTP connection, you can specify the command to be run with the option -o sftp.command='foobar'.

Note

Please be aware that sftp servers close connections when no data is received by the client. This can happen when restic is processing huge amounts of unchanged data. To avoid this issue add the following lines to the client’s .ssh/config file:
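A sketch using OpenSSH's keep-alive options is shown below. The interval and count are example values rather than required ones, and the lines are written to a scratch file so the snippet leaves your real configuration untouched:

```shell
# Scratch file for illustration; in practice append to ~/.ssh/config.
keepalive_cfg="$(mktemp)"
cat > "$keepalive_cfg" <<'EOF'
ServerAliveInterval 60
ServerAliveCountMax 240
EOF

# The client now sends a keep-alive every 60 seconds and gives up only
# after 240 unanswered probes.
cat "$keepalive_cfg"
```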

REST Server¶

In order to back up data to the remote server via HTTP or HTTPS protocol, you must first set up a remote REST server instance. Once the server is configured, accessing it is achieved by changing the URL scheme like this:

Depending on your REST server setup, you can use HTTPS protocol, password protection, multiple repositories or any combination of those features. The TCP/IP port is also configurable. Here are some more examples:
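The combinations mentioned above can be sketched as repository locations. The host name, port 8000, the user:pass credentials and the repository name my_backup_repo are all placeholders; the loop prints the init commands rather than running them:

```shell
# Plain HTTP, HTTPS, HTTPS with credentials, and a named repository.
plain="rest:http://host:8000/"
tls="rest:https://host:8000/"
with_auth="rest:https://user:pass@host:8000/"
named_repo="rest:https://user:pass@host:8000/my_backup_repo/"

for repo in "$plain" "$tls" "$with_auth" "$named_repo"; do
    echo "restic -r $repo init"
done
```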

If you use TLS, restic will use the system’s CA certificates to verify the server certificate. When the verification fails, restic refuses to proceed and exits with an error. If you have your own self-signed certificate, or a custom CA certificate should be used for verification, you can pass restic the certificate filename via the --cacert option. It will then verify that the server’s certificate is contained in the file passed to this option, or signed by a CA certificate in the file. In this case, the system CA certificates are not considered at all.

REST server uses exactly the same directory structure as local backend, so you should be able to access it both locally and via HTTP, even simultaneously.

Amazon S3¶

Restic can back up data to any Amazon S3 bucket. However, in this case, changing the URL scheme is not enough since Amazon uses special security credentials to sign HTTP requests. Consequently, you must first set up the following environment variables with the credentials you obtained while creating the bucket.

You can then easily initialize a repository that uses your Amazon S3 as a backend. If the bucket does not exist it will be created in the default location:
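A sketch of both steps, with placeholder credentials and the placeholder bucket bucket_name. The init command is printed rather than executed:

```shell
# Placeholder credentials; substitute the key pair of your AWS account.
export AWS_ACCESS_KEY_ID="<MY_ACCESS_KEY>"
export AWS_SECRET_ACCESS_KEY="<MY_SECRET_ACCESS_KEY>"

# Path-style location: endpoint first, then the bucket name.
repo="s3:s3.amazonaws.com/bucket_name"
echo "restic -r $repo init"
```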

If needed, you can manually specify the region to use by either setting the environment variable AWS_DEFAULT_REGION or calling restic with an option parameter like -o s3.region='us-east-1'. If the region is not specified, the default region is used. Afterwards, the S3 server (at least for AWS, s3.amazonaws.com) will redirect restic to the correct endpoint.

Until version 0.8.0, restic used a default prefix of restic, so the files in the bucket were placed in a directory named restic. If you want to access a repository created with an older version of restic, specify the path after the bucket name like this:

For an S3-compatible server that is not Amazon (like Minio, see below), or is only available via HTTP, you can specify the URL to the server like this: s3:http://server:port/bucket_name.

Note

restic expects path-style URLs, for example s3.us-west-2.amazonaws.com/bucket_name. Virtual-hosted-style URLs like bucket_name.s3.us-west-2.amazonaws.com, where the bucket name is part of the hostname, are not supported. These must be converted to path-style URLs instead, for example s3.us-west-2.amazonaws.com/bucket_name.

Note

Certain S3-compatible servers do not properly implement the ListObjectsV2 API, most notably Ceph versions before v14.2.5. On these backends, as a temporary workaround, you can provide the -o s3.list-objects-v1=true option to use the older ListObjects API instead. This option may be removed in future versions of restic.

Minio Server¶

Minio is an Open Source Object Storage, written in Go and compatible with the AWS S3 API.

- Download and Install Minio Server.

- You can also refer to https://docs.minio.io for step by step guidance on installation and getting started on Minio Client and Minio Server.

You must first set up the following environment variables with the credentials of your Minio Server.

Now you can easily initialize restic to use Minio server as a backend with this command.
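A sketch of the Minio setup, assuming a server listening on localhost port 9000 and a bucket named restic (both assumptions for illustration). Minio reuses the AWS variable names; the init command is printed rather than run:

```shell
# Placeholder credentials of the Minio server.
export AWS_ACCESS_KEY_ID="<YOUR-MINIO-ACCESS-KEY-ID>"
export AWS_SECRET_ACCESS_KEY="<YOUR-MINIO-SECRET-ACCESS-KEY>"

# S3-compatible servers are addressed with an explicit URL after "s3:".
repo="s3:http://localhost:9000/restic"
echo "restic -r $repo init"
```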

Wasabi¶

Wasabi is a low cost AWS S3 conformant object storage provider. Due to its S3 conformance, Wasabi can be used as a storage provider for a restic repository.

- Create a Wasabi bucket using the Wasabi Console.

- Determine the correct Wasabi service URL for your bucket here.

You must first set up the following environment variables with the credentials of your Wasabi account.

Now you can easily initialize restic to use Wasabi as a backend with this command.

Alibaba Cloud (Aliyun) Object Storage System (OSS)¶

Alibaba OSS is an encrypted, secure, cost-effective, and easy-to-use object storage service that enables you to store, back up, and archive large amounts of data in the cloud.

Alibaba OSS is S3 compatible so it can be used as a storage provider for a restic repository with a couple of extra parameters.

- Determine the correct Alibaba OSS region endpoint - this will be something like oss-eu-west-1.aliyuncs.com

- You’ll need the region name too - this will be something like oss-eu-west-1

You must first set up the following environment variables with the credentials of your Alibaba OSS account.

Now you can easily initialize restic to use Alibaba OSS as a backend with this command.

For example with an actual endpoint:

OpenStack Swift¶

Restic can back up data to an OpenStack Swift container. Because Swift supports various authentication methods, credentials are passed through environment variables. In order to help integration with existing OpenStack installations, the naming convention of those variables follows the official Python Swift client:

Restic should be compatible with an OpenStack RC file in most cases.

Once environment variables are set up, a new repository can be created. The name of the Swift container and optional path can be specified. If the container does not exist, it will be created automatically:
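As a sketch, the simplest (v1) authentication uses the three variables below. The values, the container name container_name and the path are placeholders, and the init command is only printed:

```shell
# Placeholder v1 credentials, named as in the Python Swift client.
export ST_AUTH="<MY_AUTH_URL>"
export ST_USER="<MY_USER_NAME>"
export ST_KEY="<MY_USER_PASSWORD>"

# Location format: swift:<container>:<path inside the container>.
repo="swift:container_name:/path"
echo "restic -r $repo init"
```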

The policy of the new container created by restic can be changed using an environment variable:

Backblaze B2¶

Restic can back up data to any Backblaze B2 bucket. You need to first set up the following environment variables with the credentials you can find in the dashboard on the “Buckets” page when signed into your B2 account:

Note

As of version 0.9.2, restic supports both master and non-master application keys. If using a non-master application key, ensure that it is created with at least read and write access to the B2 bucket. On earlier versions of restic, a master application key is required.

You can then initialize a repository stored at Backblaze B2. If the bucket does not exist yet and the credentials you passed to restic have the privilege to create buckets, it will be created automatically:
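A sketch with placeholder credentials, a placeholder bucket name bucketname and an example path inside the bucket. The init command is printed rather than run:

```shell
# Placeholder credentials from the B2 dashboard.
export B2_ACCOUNT_ID="<MY_APPLICATION_KEY_ID>"
export B2_ACCOUNT_KEY="<MY_APPLICATION_KEY>"

# Location format: b2:<bucket>:<path inside the bucket>.
repo="b2:bucketname:path/to/repo"
echo "restic -r $repo init"
```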

Note that the bucket name must be unique across all of B2.

The number of concurrent connections to the B2 service can be set with the -o b2.connections=10 switch. By default, at most five parallel connections are established.

Microsoft Azure Blob Storage¶

You can also store backups on Microsoft Azure Blob Storage. Export the Azure account name and key as follows:

Afterwards you can initialize a repository in a container called foo in the root path like this:
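Both steps can be sketched as follows, with placeholder credentials. The init command is printed rather than run:

```shell
# Placeholder storage account name and key.
export AZURE_ACCOUNT_NAME="<ACCOUNT_NAME>"
export AZURE_ACCOUNT_KEY="<SECRET_KEY>"

# Container foo, repository at the root path.
repo="azure:foo:/"
echo "restic -r $repo init"
```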

The number of concurrent connections to the Azure Blob Storage service can be set with the -o azure.connections=10 switch. By default, at most five parallel connections are established.

Google Cloud Storage¶

Restic supports Google Cloud Storage as a backend and connects via a service account.

For normal restic operation, the service account must have the storage.objects.{create,delete,get,list} permissions for the bucket. These are included in the “Storage Object Admin” role. restic init can create the repository bucket. Doing so requires the storage.buckets.create permission (“Storage Admin” role). If the bucket already exists, that permission is unnecessary.

To use the Google Cloud Storage backend, first create a service account key and download the JSON credentials file. Second, find the Google Project ID that you can see in the Google Cloud Platform console at the “Storage/Settings” menu. Export the path to the JSON key file and the project ID as follows:

Restic uses Google’s client library to generate default authentication material, which means if you’re running in Google Container Engine or are otherwise located on an instance with default service accounts then these should work out of the box.

Alternatively, you can specify an existing access token directly:

If GOOGLE_ACCESS_TOKEN is set, all other authentication mechanisms are disabled. The access token must have at least the https://www.googleapis.com/auth/devstorage.read_write scope. Keep in mind that access tokens are short-lived (usually one hour), so they are not suitable if creating a backup takes longer than that, for instance.

Once authenticated, you can use the gs: backend type to create a new repository in the bucket foo at the root path:
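A sketch of the service-account variant. The project ID and the key file location are placeholders, and the init command is only printed:

```shell
# Placeholder project ID and path to the downloaded JSON key file.
export GOOGLE_PROJECT_ID=123123123123
export GOOGLE_APPLICATION_CREDENTIALS="$HOME/.config/gs-secret-restic-key.json"

# Bucket foo, repository at the root path.
repo="gs:foo:/"
echo "restic -r $repo init"
```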

The number of concurrent connections to the GCS service can be set with the -o gs.connections=10 switch. By default, at most five parallel connections are established.

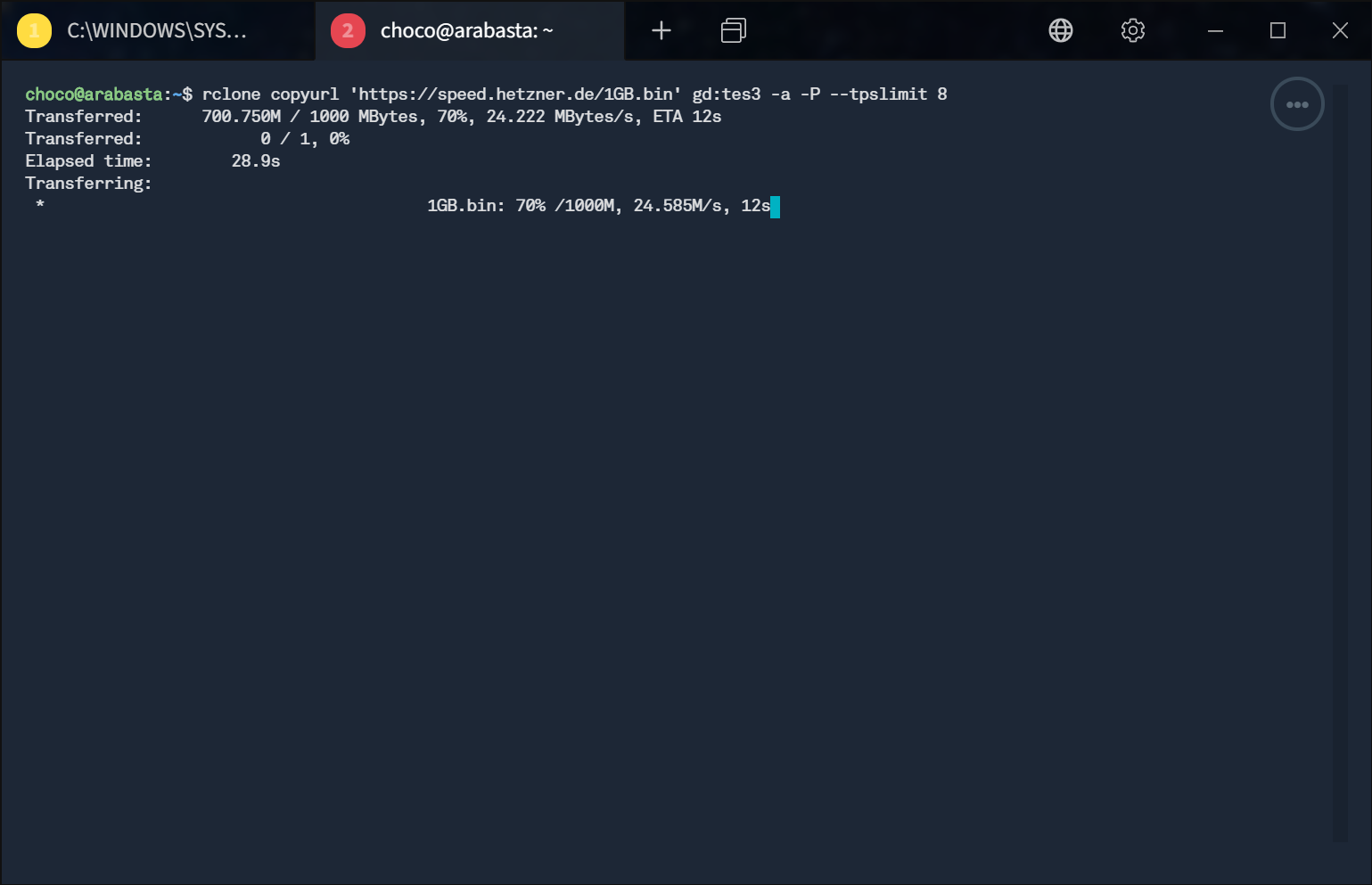

Other Services via rclone¶

The program rclone can be used to access many other different services and store data there. First, you need to install and configure rclone. The general backend specification format is rclone:<remote>:<path>; the <remote>:<path> component will be directly passed to rclone. When you configure a remote named foo, you can then call restic as follows to initiate a new repository in the path bar in the repo:

Restic takes care of starting and stopping rclone.

As a more concrete example, suppose you have configured a remote named b2prod for Backblaze B2 with rclone, with a bucket called yggdrasil. You can then use rclone to list files in the bucket like this:

In order to create a new repository in the root directory of the bucket, call restic like this:

If you want to use the path foo/bar/baz in the bucket instead, pass this to restic:
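The two repository locations from this example can be sketched as follows, using the remote b2prod and bucket yggdrasil named above. The init commands are printed rather than run:

```shell
remote=b2prod
bucket=yggdrasil

# Repository in the root of the bucket.
root_repo="rclone:${remote}:${bucket}"
# Repository under foo/bar/baz inside the same bucket.
sub_repo="rclone:${remote}:${bucket}/foo/bar/baz"

echo "restic -r $root_repo init"
echo "restic -r $sub_repo init"
```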

Listing the files of an empty repository directly with rclone should return a listing similar to the following:

Rclone can be configured with environment variables, so for instance configuring a bandwidth limit for rclone can be achieved by setting the RCLONE_BWLIMIT environment variable:
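A sketch of that setting, with 1M (one mebibyte per second) as an arbitrary example limit:

```shell
# Example limit; rclone started by restic inherits it from the environment.
export RCLONE_BWLIMIT=1M
echo "rclone bandwidth limit: $RCLONE_BWLIMIT"
```

Because restic starts rclone itself, the variable has to be exported in the shell or script that runs restic.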

For debugging rclone, you can set the environment variable RCLONE_VERBOSE=2.

The rclone backend has two additional options:

- -o rclone.program specifies the path to rclone, the default value is just rclone

- -o rclone.args allows setting the arguments passed to rclone, by default this is serve restic --stdio --b2-hard-delete

The reason for the --b2-hard-delete parameter can be found in the corresponding GitHub issue #1657.

In order to start rclone, restic will build a list of arguments by joining the following lists (in this order): rclone.program, rclone.args and as the last parameter the value that follows the rclone: prefix of the repository specification.
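The joining rule can be sketched in shell. This is an illustration of the rule only, not restic's implementation; /path/to/rclone is a placeholder and the arguments are the defaults listed above:

```shell
program="/path/to/rclone"
args="serve restic --stdio --b2-hard-delete"
repo="rclone:b2prod:yggdrasil/foo/bar/baz"

# Strip the "rclone:" prefix; the remainder is handed to rclone unchanged.
remote="${repo#rclone:}"

# Join program, arguments and remote, in that order.
rclone_cmd="$program $args $remote"
echo "$rclone_cmd"
```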

So, calling restic like this

runs rclone as follows:

Manually setting rclone.program also allows running a remote instance of rclone e.g. via SSH on a server, for example:

With these options, restic works with local files. It uses rclone and credentials stored on remotehost to communicate with B2. All data (except credentials) is encrypted/decrypted locally, then sent/received via remotehost to/from B2.

A more advanced version of this setup forbids specific hosts from removing files in a repository. See the blog post by Simon Ruderich for details.

The rclone command may also be hard-coded in the SSH configuration or the user’s public key, in this case it may be sufficient to just start the SSH connection (and it’s irrelevant what’s passed after rclone: in the repository specification):

Password prompt on Windows¶

At the moment, restic only supports the default Windows console interaction. If you use emulation environments like MSYS2 or Cygwin, which use terminals like Mintty or rxvt, you may get a password error.

You can work around this by using a special tool called winpty (look here and here for detailed information). On MSYS2, you can install winpty as follows:

Script was adapted from the one posted in the FreeNAS community forums by Martin Aspeli: https://www.ixsystems.com/community...2-backup-solution-instead-of-crashplan.58423/

Why? FreeNAS (v11.1 onwards) now supports rclone natively and also has GUI entries for Cloudsync, however there is no option to use additional parameters within the GUI, thus I have adapted a script to be run instead via a cron job.

Note: The rclone config file saved via the CLI is different to the one used in the FreeNAS GUI, so it's safe to update this configuration file via rclone config without affecting anything you have configured in the FreeNAS GUI.

### Change Log ###

Update(1): The log file rclone produces is not user friendly, therefore this script will also create a more user friendly log (to use as the email body). This shows the stats, and for 'sync' a list of files Copied (new), Copied (replaced existing), and Deleted - as well as any errors/notices; for 'cryptcheck' it only lists errors or notices. (see my github site for examples)

29-02-2020: I've noticed that since upgrading to FreeNAS v11.3 this script was no longer working. When you create the 'rclone.conf' file from a shell prompt (or SSH connection) with the command 'rclone config' it is stored at '/root/.config/rclone/rclone.conf' (you can check this by running the command 'rclone config file').

However, when you then run this bash script as a cron task, for some reason it is looking for the config file at '/.config/rclone/rclone.conf'; a quick fix was to copy the 'rclone.conf' file to this folder. However, it's not a good idea to have multiple copies of the 'rclone.conf' config file as this could become confusing when you make changes. Therefore I decided to update the script to utilise the '--config' parameter of rclone to point to the config file created at '/root/.config/rclone/rclone.conf'. I did log this as a bug (NAS-105088), but it was closed by IX as a script issue - however, I don't believe that is correct!

12-03-2020: I have updated the script to be dual purpose, with regard to running a 'rclone sync' to back up, and 'rclone cryptcheck' to do a verification of your cloud based files and see if any files are missing as well as confirming the checksums of all encrypted files (for the paranoid out there!). You now need to run this script with a parameter, for example: 'rclonebackup.sh sync' or 'rclonebackup.sh cryptcheck'; any other parameter or no parameter will result in an error email being sent. As this reuses code dynamically it means that any configuration changes in this script are only entered once and used for both sync and cryptcheck.

Further information about cryptcheck can be found at: https://rclone.org/commands/rclone_cryptcheck/

02-04-2020: I have updated it as follows:

- Tidied up the script;

- Separated out user and system defined variables (to make editing easier);

- Have moved from multiple '--exclude' statements to using '--exclude-from' and a separate file to hold excludes, which will make managing excludes much easier and saves editing this script.

- Have changed the default email to root.

- Have added in a third parameter option 'check', which checks the files in the source and destination match.

- Rewrote the email generation aspect of the script to remove the need for the separate email_attachments.sh script - all managed within the one script now; as well as moving from 'echo' to 'printf' commands for more control.

- Tidied up the script some more;

- Have added another option to compress (gzip) the log file before being attached to the email (compressLog='yes'), this is set to 'yes' by default.

- You can also now request the script to keep a local copy (backup) of the unformatted log file (keepLog='no'), this is set to 'no' by default, as well as how many logs to keep (amountBackups=31). Keeping the log was code I used to cut-n-paste in for my debugging, but decided to leave it in as an option now.

- Have added in some checks that will verify that some of the user defined variables are valid.
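The parameter handling described in the change log (sync, cryptcheck or check, anything else being an error) could be sketched like this. The function name select_mode is hypothetical and the code is not taken from rclonebackup.sh; where the real script sends an error email, this sketch only prints a marker:

```shell
# Hypothetical helper: accept one of the three documented modes.
select_mode() {
    case "${1:-}" in
        sync|cryptcheck|check)
            echo "$1"
            ;;
        *)
            # The script described above sends an error email at this point.
            echo "invalid"
            return 1
            ;;
    esac
}

select_mode sync
select_mode cryptcheck
select_mode "" || echo "no valid parameter given"
```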

Step 1

First off you need to configure rclone for Backblaze B2 as per: https://rclone.org/b2/, and if encrypting your data with rclone you then need to configure as per: https://rclone.org/crypt/.

Step 2

Download the rclonebackup.sh shell script and rclone_excludes.txt file from my GitHub site.

Step 3

Save the two files within a dataset on your FreeNAS server.

Step 4

You now need to edit the rclonebackup.sh script, to update some of the user configurations, as per your specific requirements:

Basically the default settings above will:

src:

backup all data within your pool (/mnt/tank in my case).

dest:

store them in the root of your remote:bucket on Backblaze B2 (secret:/ in my case).

exclude_list:

The path where you have saved the 'rclone_excludes.txt' file (include the filename as well).

compressLog:

Do you want the log compressed with gzip?

keepLog:

Do you want to keep the log?

backupDestination:

Where to keep the log?

amountBackups

How many logs to keep?

email:

Your email address, for backup results and log file. Can be left as root

cfg_file:

The location of the rclone.conf file.

log_level:

for your first run I recommend this is set to NOTICE, as this will only report errors, otherwise the log file will get very large. Once you have completed your first backup, you can change this to INFO, which will let you know what files were backed up or deleted.

min_age:

set a minimal age of the file before it is backed up, handy for ignoring files currently in use.

transfer:

the number of simultaneous transfers, Backblaze recommends a lot, so I have set it at 16, but you can tweak this depending on your broadband connection.

You then may want to edit the rclone_excludes.txt file and add/remove exclusions as per your requirements.

Step 4a

Just a note on other parameters used:

--fast-list:

Use recursive list if available, uses more memory but fewer Backblaze B2 Class C transactions (i.e. cheaper). Not supported by all cloud providers though.

--copy-links:

Follow symlinks and copy the pointed to item. (delete this line if not wanted)

--b2-hard-delete:

Permanently delete files on remote removal, otherwise hide files. This means no version control of files on Backblaze (saves space & thus costs). This is a Backblaze B2 specific command. (delete this line if not wanted)

--exclude:

These lines will exclude certain files and folders from being backed-up. (delete this if not wanted, or edit, or add additional lines as per your needs)
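To show how the settings from Step 4 and the parameters above fit together, here is a sketch of the resulting rclone sync call. It is not the script's actual code: the variable names follow the descriptions above, while the exclude file path and the min_age value are placeholders. The command is printed rather than run:

```shell
# User settings as described in Step 4 (placeholders where noted).
src=/mnt/tank
dest=secret:/
cfg_file=/root/.config/rclone/rclone.conf
exclude_list=/mnt/tank/scripts/rclone_excludes.txt   # placeholder path
log_level=NOTICE
min_age=15m                                          # placeholder value
transfers=16

# Assemble the sync call with the rclone flags discussed above.
rclone_cmd="rclone sync $src $dest --config $cfg_file --fast-list --copy-links --b2-hard-delete --exclude-from $exclude_list --min-age $min_age --transfers $transfers --log-level $log_level"
echo "$rclone_cmd"
```

Dropping --copy-links or --b2-hard-delete from the string corresponds to deleting those lines in the script.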

Step 5

You now want to create a new cron job task via the FreeNAS GUI, remembering the command you want to run is the full path to the rclonebackup.sh script, with the parameter of sync or cryptcheck or check:

I recommend that the first time you run this, you do not enable the task. This is because the first run could take some time, many days if you have a few TB to back up. You do not want the server starting a new task while the old one is still running, as this will only confuse the backup process and slow down your server.

So, for your first time, just select the task and select Run Now, i.e.

Note: If the FreeNAS server reboots at any time during this first run, then you will need to restart the process by selecting Run Now again. This will re-scan the files and continue from where it last got to.

Step 6

Once the backup task has completed you should receive an email, along with a log file (of any errors encountered).

You can now edit the cron job and tick the Enabled box, so that the task will run automatically at the times you want. You may also want to edit the rclonebackup.sh script to change the log-level to INFO at this stage.

I hope this helps?

Yours

Jonathan